OpenAI’s GPT-4 was the latest version of the company’s text generation models at the time of writing.

Through OpenAI’s API, you can use GPT-4 in your own Python applications.

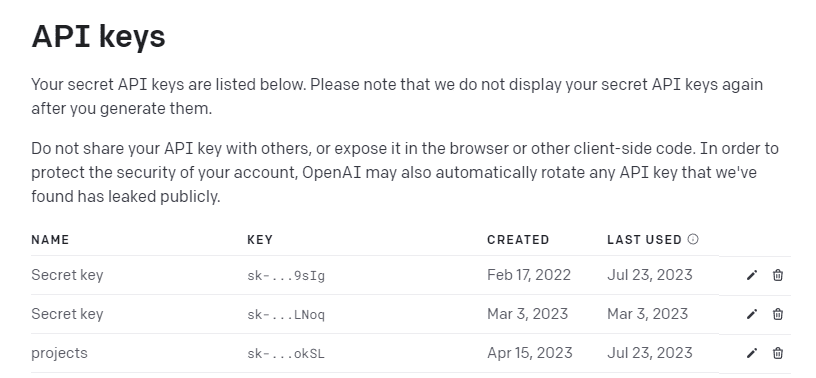

Obtaining API Access

To use any of OpenAI’s models, you must first obtain an API key.

Go to https://openai.com/api/ and click on the “Signup” button.

You will be prompted to create an account. Verify your email to complete the signup process.

Once logged in, you can create and manage your API keys from the account dashboard. Copy a new key when you create it, because it is shown in full only once.

Keep your keys private: anyone who has one can use your OpenAI quota and spend the credits you paid for.

Installing Required Libraries

To use the OpenAI API from Python, you need to install the OpenAI Python library. You can do this with pip in the terminal:

```shell
pip install openai
```

This will download and install the required package.
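The examples in this article call `openai.chat.completions.create`, which is the interface of the 1.x releases of the `openai` package; the older 0.x releases used `openai.ChatCompletion.create` instead. Here is a minimal sketch for checking which major version is installed, using only the standard library (`major_version` and `installed_openai_major` are hypothetical helper names):

```python
from importlib import metadata
from typing import Optional


def major_version(version: str) -> int:
    """Return the major component of a version string such as "1.30.1"."""
    return int(version.split(".")[0])


def installed_openai_major() -> Optional[int]:
    """Major version of the installed openai package, or None if it is missing."""
    try:
        return major_version(metadata.version("openai"))
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    major = installed_openai_major()
    if major is None:
        print("openai is not installed; run: pip install openai")
    elif major < 1:
        print("openai is older than 1.0; run: pip install --upgrade openai")
    else:
        print(f"openai {major}.x is installed")
```

If the check reports a 0.x version, upgrading lets the code in the rest of this article run as written.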

You also need to import the openai module at the top of your Python code:

```python
import openai
```

Making Your First API Call

Once you have obtained your API key and installed the library, you can make your first API call.

First, load your key into the Python environment:

```python
openai.api_key = "YOUR_API_KEY"
```

Replace YOUR_API_KEY with your actual API secret key.
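Hard-coding the key makes it easy to leak by accident, for example by committing the script to a public repository. A common alternative is to keep the key in an environment variable; the 1.x `openai` library also falls back to `OPENAI_API_KEY` when no key is set in code. A minimal sketch of loading it explicitly (`load_api_key` is a hypothetical helper):

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Read the API key from an environment variable instead of the source code."""
    key = os.environ.get(var_name)
    if not key:
        # Fail early with a clear message rather than sending an unauthenticated request.
        raise RuntimeError(
            f"Set the {var_name} environment variable before running this script."
        )
    return key
```

Set the variable in your terminal (for example `export OPENAI_API_KEY=...` on macOS or Linux), then use `openai.api_key = load_api_key()` in place of the hard-coded string.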

You can now use the library’s functions to call the API. GPT-4 is a chat model, so it is accessed through the Chat Completions endpoint, which the library exposes as `openai.chat.completions.create`.

Let’s try a simple chat completion example:

```python
response = openai.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": "Generate a 3 sentence story about friendship"}],
)

print(response)
```

This will prompt GPT-4 to generate text based on your prompt.

The response will contain the generated text, along with other metadata. To access just the text, use:

```python
print(response.choices[0].message.content)
```
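Putting these pieces together, one way to package the call is a small reusable function. This is a sketch, not the author’s original script: `ask_gpt4` is a hypothetical helper, and it takes the client as an argument so you can pass either the configured `openai` module or an `OpenAI()` client object, both of which expose `chat.completions.create` in the 1.x library.

```python
def ask_gpt4(client, prompt: str, model: str = "gpt-4") -> str:
    """Send a single user message and return the text of the first reply."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    # The generated text lives on the first choice's message.
    return response.choices[0].message.content
```

For example, after `import openai` and setting `openai.api_key`, run `print(ask_gpt4(openai, "Generate a 3 sentence story about friendship"))`.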


This should give you a basic API call workflow. From here, you can start experimenting with text generation using GPT-4!

Fundamentals of GPT-4 Programming

Now that you can make basic calls to the GPT-4 API, let’s go over some core programming concepts to help you generate high-quality responses for your applications.

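The API exposes a number of parameters you can tweak to control the nature of GPT-4’s responses:

- temperature: Controls randomness (0 to 2); higher values make the output more random, lower values make it more focused and deterministic.

- top_p: Nucleus sampling; the model only considers the most likely tokens whose probabilities add up to top_p, so 0.1 means only the top 10% of probability mass is used.

- frequency_penalty: Penalizes tokens in proportion to how often they have already appeared in the text so far, which reduces verbatim repetition.

- presence_penalty: Penalizes tokens that have appeared in the text so far at all, which nudges the model toward new topics.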
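To build intuition for these knobs, here is a toy, self-contained illustration on an invented four-token vocabulary. It follows the documented meaning of each parameter (temperature divides the logits before the softmax, top_p keeps the most likely tokens until their cumulative probability reaches top_p, and the two penalties subtract from the logits of tokens already generated), but it is only a sketch, not OpenAI’s actual sampling code; the logits and counts are made up.

```python
import math


def softmax(logits: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    scaled = {tok: value / temperature for tok, value in logits.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - top) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept: dict[str, float] = {}
    cumulative = 0.0
    for tok, p in sorted(probs.items(), key=lambda item: item[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize the survivors


def apply_penalties(
    logits: dict[str, float],
    counts: dict[str, int],
    frequency_penalty: float = 0.0,
    presence_penalty: float = 0.0,
) -> dict[str, float]:
    """Lower the logits of tokens that have already appeared (counts = occurrences so far)."""
    adjusted = {}
    for tok, value in logits.items():
        c = counts.get(tok, 0)
        # Frequency penalty grows with each repeat; presence penalty applies once if c > 0.
        adjusted[tok] = value - c * frequency_penalty - (presence_penalty if c > 0 else 0.0)
    return adjusted


logits = {"friend": 2.0, "pal": 1.0, "buddy": 0.5, "enemy": -1.0}

print(softmax(logits, temperature=0.5))  # sharper: "friend" dominates
print(softmax(logits, temperature=1.5))  # flatter: more variety
print(top_p_filter(softmax(logits), top_p=0.3))  # only the top token survives
print(apply_penalties(logits, {"friend": 3}, frequency_penalty=0.5, presence_penalty=0.5))
```

Comparing the printed distributions shows why high temperature and high top_p both increase variety, while the penalties specifically discourage tokens that were already used.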

Finding the right balance for high-quality output takes experimentation, and that is your role as a prompt engineer: experiment!

The way you craft the prompt plays a major role in the API’s response. Here are some best practices:

- Provide examples of desired tone/formatting

- Simplify complex prompts into smaller steps

- Use clear, concrete language

- Prime GPT-4 with a system message or a few example exchanges so it settles into the conversational style you want
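Two of these practices, showing examples of the desired tone and priming the conversation before the real request, map directly onto the `messages` list: a `system` message sets the style, and earlier `user`/`assistant` pairs act as worked examples. A minimal sketch (the `build_messages` helper and the sample texts are hypothetical):

```python
def build_messages(
    system: str, examples: list[tuple[str, str]], prompt: str
) -> list[dict[str, str]]:
    """Assemble a chat history: style instructions, example exchanges, then the real request."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        # Each example pair shows the model the kind of answer we expect.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": prompt})
    return messages


messages = build_messages(
    system="You write warm, simple stories for children in exactly 3 sentences.",
    examples=[
        (
            "Generate a 3 sentence story about courage",
            "Mia was scared of the dark. One night she lit a small lamp and walked "
            "to the kitchen alone. She smiled, because the dark was just a room without light.",
        )
    ],
    prompt="Generate a 3 sentence story about friendship",
)
```

You would then pass `messages=messages` to `openai.chat.completions.create`.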

Taking the time to engineer good prompts can noticeably improve results.

Now let’s see how to set these parameters in a Python script:

```python
import openai

openai.api_key = "sk-XXX"  # replace with your own key

response = openai.chat.completions.create(
    model="gpt-4",
    # OpenAI's docs generally suggest tuning temperature or top_p, not both;
    # both are set here only to show the syntax.
    temperature=0.9,
    top_p=0.3,
    messages=[
        {
            "role": "user",
            "content": "Generate a 3 sentence story about friendship",
        }
    ],
)

print(response.choices[0].message.content)
```

You can also generate multiple results in one request by using the `n` parameter.
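With `n`, a single request returns several independent completions in `response.choices`. A minimal sketch that collects all of them (the `generate_variants` helper is hypothetical; keep in mind that every extra choice generates more output tokens, so larger values of `n` cost more):

```python
def generate_variants(client, prompt: str, n: int = 3, model: str = "gpt-4") -> list[str]:
    """Request n completions for the same prompt and return the text of each."""
    response = client.chat.completions.create(
        model=model,
        n=n,  # number of independent choices to generate
        temperature=0.9,  # some randomness so the variants differ
        messages=[{"role": "user", "content": prompt}],
    )
    return [choice.message.content for choice in response.choices]
```

For example, `for story in generate_variants(openai, "Generate a 3 sentence story about friendship"): print(story)` prints three different stories.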

Streamed Completions

For long responses, set the `stream` parameter to `True` so you can process the output incrementally as it is generated.

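A minimal sketch of a streamed call with the 1.x library: `stream_reply` is a hypothetical helper that takes the client (for example the configured `openai` module) as an argument, prints each piece as it arrives, and also returns the assembled text.

```python
def stream_reply(client, prompt: str, model: str = "gpt-4") -> str:
    """Print a streamed reply piece by piece and return the assembled text."""
    stream = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
        stream=True,  # receive the answer in chunks as it is generated
    )
    parts = []
    for chunk in stream:
        # Some chunks carry no choices or no text (e.g. role-only or final chunks).
        if not chunk.choices:
            continue
        content = chunk.choices[0].delta.content
        if content is not None:
            print(content, end="", flush=True)
            parts.append(content)
    print()  # finish the line once the stream ends
    return "".join(parts)
```

For example, `stream_reply(openai, "Generate a 3 sentence story about friendship")` prints the story word by word.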

Script Explained:

- Chat Creation: The `openai.chat.completions.create` function is called with several arguments: `model="gpt-4"` specifies that the GPT-4 model should be used, and `stream=True` indicates that the response should be streamed (i.e., sent in parts as they become available instead of all at once when the computation is finished).

- Processing the Response: Because of `stream=True`, the response from the OpenAI API is iterable, so you can loop over it with a `for` loop (`for chunk in response`). In each iteration, `chunk` represents one piece of the response.

- Checking and Printing the Content: Within the loop, the script checks whether `chunk.choices[0].delta` carries any content (with the 1.x library, `delta.content` is `None` on chunks that contain no text). If it does, it prints the value with `print(chunk.choices[0].delta.content, end="")`. The `end=""` argument tells `print` not to add a newline, so consecutive prints appear on the same line.

Streaming is useful for any interface that should show text as soon as it is available, such as a live chat window, and it gives you the ChatGPT-style typewriter effect.
